Event-driven systems made easy with AWS Lambda

In a world of expanding volumes of information, of growing demand for fast, frequent transactions, it’s crucial that systems process data as it becomes available. This is why the event-driven design pattern (an architectural pattern built around the production, detection, consumption of, and reaction to, events) has gained popularity over the past few years.

As we have often seen with our customers, deploying and maintaining an event-driven architecture is relatively difficult and nearly always requires custom development. Thankfully, a new service from Amazon called Lambda will dramatically lower the barriers for building event-driven systems. When coupled with FME technology, this gives you an extremely powerful set of tools for the elastic processing and transformation of hundreds of supported datasets.

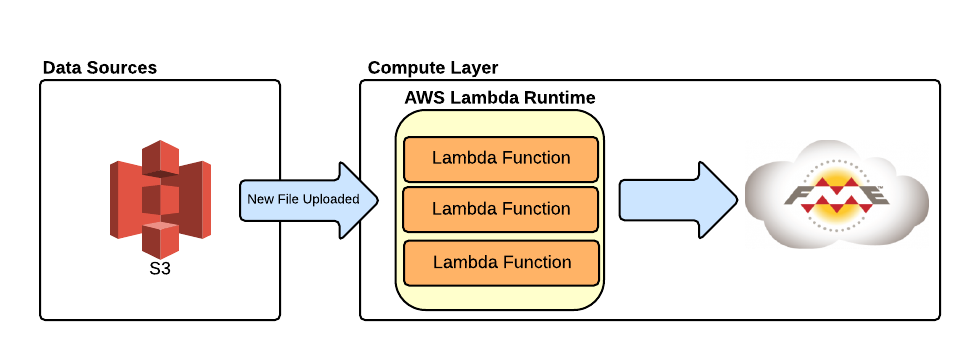

The Lambda service sits between the data and the compute layer in AWS. It allows developers to build dynamic event-driven applications by providing an interface to upload Node.js code and then set triggers in AWS that run the code. Triggers can currently be set on the Amazon S3, Amazon Kinesis, and Amazon DynamoDB services. As is to be expected, Lambda scales as the events scale, so costs stay low while performance and reliability stay high.

Let’s walk through a scenario for setting up an event-driven system. We’ll focus on processing data that comes into Amazon S3 buckets, as this is the most popular workflow we have seen here at Safe Software.

Scenario overview: Amazon S3 triggers

Below is a high-level overview of the workflow we will implement. This is suitable for any FME workflow that has a file-based input. It is particularly well suited to workflows that you wish to automate and that run infrequently (e.g. daily update of web mapping tiles, bulk processing of sensor data, or loading LiDAR data into a database).

The key difference when compared to normal FME workflows is that you are provisioning FME capacity on demand. Since you only need to pay for the FME compute time that you use, you can scale up dramatically for a short period of time to undertake the processing. The result: you pay less for more compute power and get the processing done in a much shorter period of time.

Workflow implementation: Linking AWS components

Let’s take a more in-depth look at the steps required to implement this workflow.

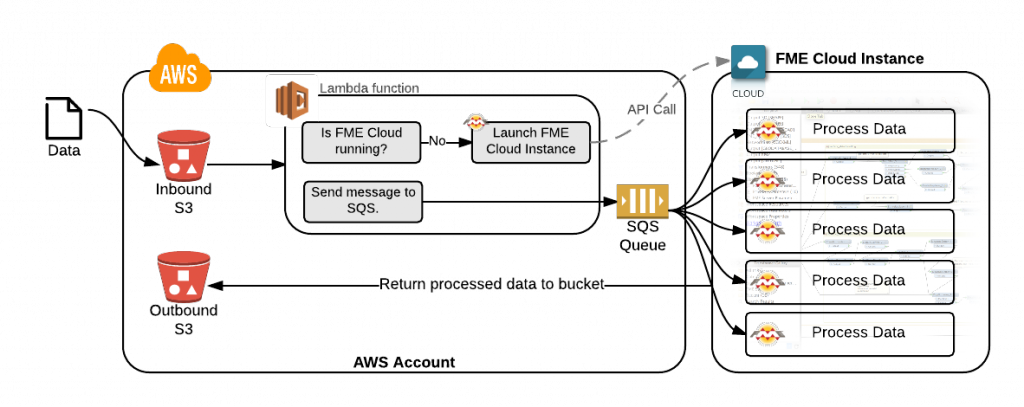

Step 1: File is placed in Inbound S3 bucket.

Step 2: Notification fires on the S3 bucket and sends an event to a Lambda function.

Step 3: Lambda function (JavaScript code) checks to see if the FME Cloud instance is running; if not, it launches it. It also sends a message with details of the event to the SQS queue.

Step 4: The FME Cloud instance (once online) automatically begins pulling SQS messages from the queue. The SQS message contains the location of the data on S3, which FME uses to download and process the data. On completion the processed data is sent to the outbound S3 bucket.
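To make steps 2 to 4 more concrete, here is a minimal sketch of what travels through the pipeline. It assumes the standard S3 notification layout, in which each entry in `Records` carries the bucket name and the URL-encoded object key. The helper name `listS3Objects`, the bucket name, and the sample key are hypothetical, and the sample event is trimmed to the fields used here.

```javascript
// Hypothetical helper: list the bucket and key of every object in an S3 event.
function listS3Objects(event) {
    return (event.Records || []).map(function (record) {
        return {
            bucket: record.s3.bucket.name,
            // S3 URL-encodes keys in notifications and uses '+' for spaces.
            key: decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '))
        };
    });
}

// Trimmed example of an S3 notification (real events carry more fields).
var sampleEvent = {
    Records: [{
        eventName: 'ObjectCreated:Put',
        s3: {
            bucket: { name: 'inbound-bucket' },
            object: { key: 'lidar/tile+01.las', size: 1024 }
        }
    }]
};

// The Lambda function forwards the event as a JSON string in the SQS message
// body, so a consumer can recover the same locations by parsing that body.
var messageBody = JSON.stringify(sampleEvent);
var locations = listS3Objects(JSON.parse(messageBody));

console.log(locations);
```

Because the whole event is forwarded unchanged, the consumer needs no knowledge of Lambda itself; it only needs to understand the S3 notification format.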

Step by step: Putting together AWS Lambda, S3, and FME Cloud

Set up AWS S3 buckets

Follow the tutorial here to set up your AWS bucket. This walks you through creating the buckets, setting up IAM, and configuring S3 to publish events.

Set up Lambda Code

Below is the Lambda code to provision FME Cloud instances and send messages to an SQS queue. You will need to update the QUEUE_URL, <FME Cloud Path>, and <FME Cloud Token>.

    console.log('Loading event');

    // Required for SQS
    var QUEUE_URL = 'https://sqs.us-east-1.amazonaws.com/441807460849/lambda-processing-s3';
    var AWS = require('aws-sdk');
    var sqs = new AWS.SQS({region: 'us-east-1'});
    var https = require('https');

    exports.handler = function(event, context) {
        // Send SQS message with details of file uploaded to S3.
        var params = {
            MessageBody: JSON.stringify(event),
            QueueUrl: QUEUE_URL
        };
        sqs.sendMessage(params, function(err, data) {
            if (err) {
                console.log('error:', 'Fail Send Message' + err);
                context.done('error', 'ERROR Put SQS'); // ERROR with message
                return;
            }
            console.log('data:', data.MessageId);
            // Finish only after the instance check completes, so the function
            // is not ended while the HTTPS request is still in flight.
            checkInstance(function() {
                context.done(null, ''); // SUCCESS
            });
        });
    };

    // Retrieve current state of instance to see if it is PAUSED.
    // The message is already queued at this point, so failures are only logged.
    function checkInstance(done) {
        var options = {
            host: 'api.fmecloud.safe.com',
            port: 443,
            path: '/v1/instances/<FME Cloud Path>.json',
            headers: {'Authorization': 'Bearer <FME Cloud Token>'},
            method: 'GET'
        };
        var req = https.request(options, function(response) {
            console.log(response.statusCode + ':- Instance state response code.');
            // The body can arrive in several chunks, so parse it only at the end.
            var body = '';
            response.on('data', function(d) {
                body += d;
            });
            response.on('end', function() {
                var state;
                try {
                    state = JSON.parse(body).state;
                } catch (e) {
                    console.log('Could not parse instance state: ' + e);
                    return done();
                }
                if (state === 'PAUSED') {
                    // If it is paused then launch the instance
                    launchInstance(done);
                } else {
                    console.log('Instance already running.');
                    done();
                }
            });
        });
        req.on('error', function(e) {
            console.log('Instance state request failed: ' + e);
            done();
        });
        req.end();
    }

    // Launch instance if it is paused.
    function launchInstance(done) {
        var options = {
            host: 'api.fmecloud.safe.com',
            port: 443,
            path: '/v1/instances/<FME Cloud Path>/start',
            headers: {'Authorization': 'Bearer <FME Cloud Token>', 'Content-Length': 0},
            method: 'PUT'
        };
        var req = https.request(options, function(response) {
            console.log(response.statusCode + ':- Launch instance status response code.');
            response.resume(); // Discard the response body.
            done();
        });
        req.on('error', function(e) {
            console.log('Launch instance request failed: ' + e);
            done();
        });
        req.end();
    }

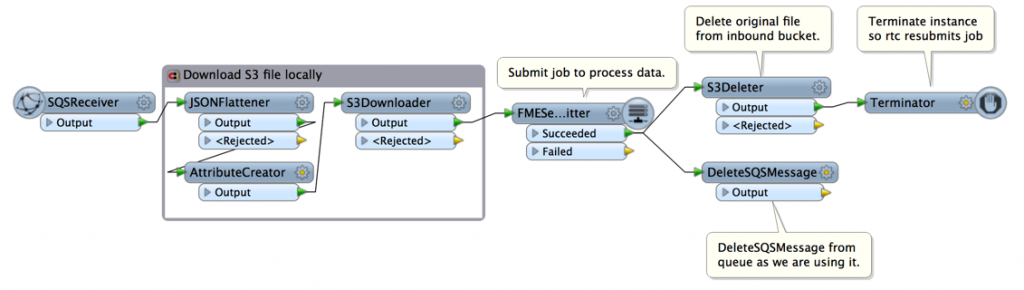

Configure FME Cloud workflows

When the FME Cloud instance comes online, an FME workspace is configured on each engine to pull messages from the SQS queue, download the data from S3, process the data, and then send the data back to S3.

The workspace is available to download here. If you are interested in this scenario, please contact support and we will be more than happy to help.

How much does it cost?

Let’s run a quick cost calculation. Say we have 120GB of data a day coming in and it takes about 40 hours of FME Engine compute time to process the data.

AWS Costs

S3 Storage Costs – $0.03 per GB per month

120GB of data is stored in the inbound bucket for 4 hours a day:

120GB * (4 hours/24 hours) * $0.03 = $0.60

500GB of data is always stored in the outbound bucket:

500GB * $0.03 = $15.00

Lambda Costs (Free) – First 1 million requests per month are free

SQS Costs (Free) – First 1 million Amazon SQS Requests per month are free

Total AWS Costs: $15.60 a month.
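The AWS arithmetic above can be reproduced with a short sketch, which makes it easy to plug in your own volumes. It treats the $0.03 rate as a monthly price per GB, as the monthly total implies, and prorates storage by the fraction of each day the data is held. The function names are hypothetical; the figures are the ones quoted above.

```javascript
// Storage price quoted above, treated as dollars per GB per month.
var S3_PRICE_PER_GB_MONTH = 0.03;

// Hypothetical helper: monthly cost of holding `gigabytes` in S3 for
// `hoursStoredPerDay` hours out of every 24.
function s3StorageCost(gigabytes, hoursStoredPerDay) {
    var fractionOfDay = hoursStoredPerDay / 24;
    return gigabytes * fractionOfDay * S3_PRICE_PER_GB_MONTH;
}

// Round to whole cents to hide floating-point noise.
function toCents(amount) {
    return Math.round(amount * 100) / 100;
}

var inboundCost = toCents(s3StorageCost(120, 4));   // inbound bucket, 4 hours a day
var outboundCost = toCents(s3StorageCost(500, 24)); // outbound bucket, always stored
var awsTotal = toCents(inboundCost + outboundCost);

console.log('Inbound:   $' + inboundCost.toFixed(2));
console.log('Outbound:  $' + outboundCost.toFixed(2));
console.log('AWS total: $' + awsTotal.toFixed(2));
```

Lambda and SQS are left out because both stay within the free tier in this scenario.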

FME Cloud Costs

Forty hours of FME Engine processing time are needed each day. The 16-core Enterprise instance delivers 14 hours of FME Engine compute per hour, so three hours of the Enterprise instance deliver 42 compute hours, enough to cover the 40 required.

Compute time: 3 hours * $8 * 31 days = $744 a month

Outbound traffic: 20GB * $0.13 * 31 days = $80.60 a month

Total FME Cloud Costs: $824.60 a month
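As a rough sketch, the FME Cloud sizing and cost can be worked out the same way. It assumes, as stated above, that one hour of the Enterprise instance delivers 14 engine-hours at $8 per hour, that 20GB leaves the instance each day at $0.13 per GB, and that the month has 31 days. The function names are hypothetical.

```javascript
var DAYS_PER_MONTH = 31;

// Hypothetical helper: whole instance-hours needed per day to cover the
// required engine-hours, rounding up because partial hours still need capacity.
function instanceHoursNeeded(engineHours, engineHoursPerInstanceHour) {
    return Math.ceil(engineHours / engineHoursPerInstanceHour);
}

// Round to whole cents to hide floating-point noise.
function toCents(amount) {
    return Math.round(amount * 100) / 100;
}

var hoursPerDay = instanceHoursNeeded(40, 14);           // 40 engine-hours at 14 per hour
var computeCost = hoursPerDay * 8 * DAYS_PER_MONTH;      // $8 per instance-hour
var trafficCost = toCents(20 * 0.13 * DAYS_PER_MONTH);   // 20GB a day at $0.13 per GB
var fmeCloudTotal = toCents(computeCost + trafficCost);

console.log('Instance hours per day: ' + hoursPerDay);
console.log('Compute:  $' + computeCost.toFixed(2));
console.log('Traffic:  $' + trafficCost.toFixed(2));
console.log('FME Cloud total: $' + fmeCloudTotal.toFixed(2));
```

Rounding the instance-hours up is what turns the 40 required engine-hours into three billed hours with a little headroom.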

The total cost to process over 3.6TB of data a month is only about $840 ($15.60 for AWS plus $824.60 for FME Cloud). That not only gives you the software and the hardware, but also a fully monitored and maintained platform that is completely automated. Only two years ago this would have been unthinkable.

Event-driven workflows moving forward

The ability to essentially create micro-services using Lambda, to glue together different architectural components without having to worry about infrastructure, is going to have a dramatic effect on how we design applications. This workflow is merely a taste of what’s possible with FME Cloud, demonstrating the flexibility of Lambda and the power of wrapping APIs around infrastructure on the FME Cloud side.

Have you had experience with deploying an event-driven architecture? How do you plan on using AWS Lambda?