National Broadband Map: The Data and Technology

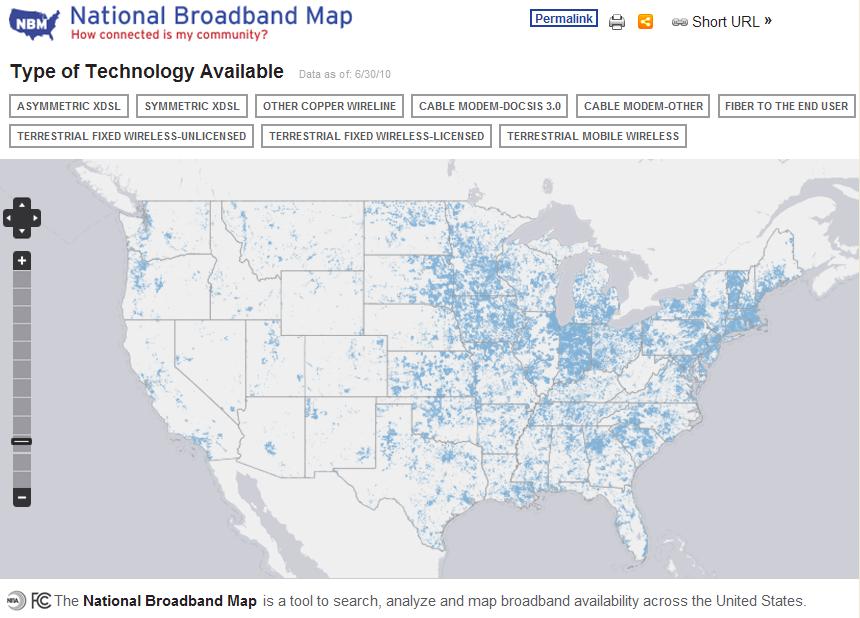

The U.S. National Broadband Map must be one of the most ambitious web mapping projects ever undertaken. For those unaware of the project, it allows users to search broadband availability across the United States and compare real download speeds with advertised broadband performance. The map is part of a huge stimulus package to improve broadband coverage and speed across the U.S.A.

In this post I’ll explore two main components of the project: the technology and data driving it.

The Technology

Interestingly, the entire software stack used to power the U.S. National Broadband Map is open source. The main components used were:

- PostgreSQL/PostGIS

- GeoServer

- GeoWebCache

- OpenLayers

I won’t go into too much detail, as Directions Magazine interviewed Juan Marín Otero, the lead geospatial architect. The main takeaway is that the architecture held up on the day of launch: they scaled the system to handle over 9,000 requests per second! Impressive. It’s great to see such highly visible and complex solutions delivered using open standards and technology.

The Data

As Juan Marín Otero stated in his interview, the real challenge lay not in the technological implementation but in the data integration. Note: most of the details I talk about here came from presentations at the NSGIC conference that my colleague Drew Rifkin attended (presentations available here).

State Data Collection

To produce the national map, state governments were funded ($293 million) to collect the data. The idea was that the states would collect the data and then feed the results back to the FCC, who would integrate the datasets and present them in the U.S. National Broadband Map.

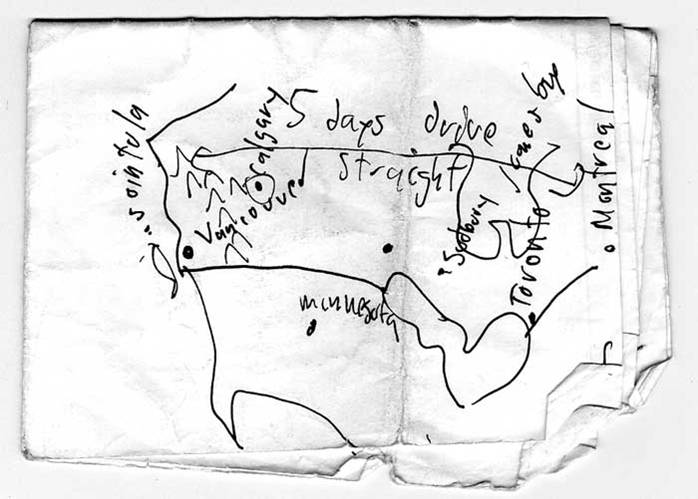

The first issue was how the states went about collecting the data. The broadband companies held the data the states needed, so the first task was to collate the data from every broadband company in the state. As Anne Neville highlighted in her presentation, this is not a trivial task: one broadband client presented the state with a Mapkin (right) highlighting where their customers were located. (Not sure how you load that into PostGIS; maybe FME needs a new reader?)

Another problem was the structure of the data presented to the states. The broadband companies delivered the data in many different formats and structures, so the states had to use spatial ETL to process, validate and then load the data into a standard data model. A further issue was that the states had to display the information at the census block level. They therefore had to carry out a large amount of aggregation and smoothing to turn the point data into averaged area data.
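To make the two steps above a little more concrete, here is a minimal Python sketch of what normalising and aggregating the provider data might look like. It is purely illustrative and not the states' actual workflow: the field names, the alias table and the sample records are all hypothetical, and it assumes each point record already carries a census block ID. In practice, assigning points to blocks would be a spatial operation done in the ETL tool or in PostGIS.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical aliases: each provider names the same fields differently.
FIELD_ALIASES = {
    "block_id": ("block_id", "census_block", "BLOCKID"),
    "provider": ("provider", "company", "PROVNAME"),
    "down_mbps": ("down_mbps", "download", "MAXDOWN"),
}


def normalize(record):
    """Map one raw record onto the standard field names.

    Returns None when a required field is missing or the speed is invalid.
    """
    clean = {}
    for target, aliases in FIELD_ALIASES.items():
        value = next((record[a] for a in aliases if a in record), None)
        if value is None:
            return None  # required field missing
        clean[target] = value
    try:
        clean["down_mbps"] = float(clean["down_mbps"])
    except (TypeError, ValueError):
        return None  # speed is not a number
    if clean["down_mbps"] < 0:
        return None  # negative speeds are rejected
    clean["block_id"] = str(clean["block_id"]).strip()
    clean["provider"] = str(clean["provider"]).strip()
    return clean


def aggregate_by_block(records):
    """Average the download speed of all point records in each census block."""
    speeds = defaultdict(list)
    for rec in records:
        speeds[rec["block_id"]].append(rec["down_mbps"])
    return {block: mean(values) for block, values in speeds.items()}


# Made-up sample input in three different provider formats.
raw = [
    {"census_block": "060750101001000", "company": "Acme", "download": "6.0"},
    {"BLOCKID": "060750101001000", "PROVNAME": "Beta Net", "MAXDOWN": 10},
    {"block_id": "060750102001000", "provider": "Acme", "down_mbps": "n/a"},
]

clean = [c for c in map(normalize, raw) if c is not None]
block_averages = aggregate_by_block(clean)
print(block_averages)
```

The third sample record fails validation because its speed is not numeric, so only one census block ends up with an averaged value. A simple mean stands in here for whatever aggregation and smoothing the states really applied.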

Integration into the National Database

After all the states had collected their data, the FCC ended up with data on 3500 broadband providers, submitted by 50 states, 2 territories and DC. The task at the federal level was to take all this data and load it into their PostGIS database. Once the import was completed, they ended up with 1650 broadband providers and 25 million records.

Another interesting piece of the puzzle is that the data is obviously continuously changing. The blue coverage on the map represents areas which have broadband. The hope is that the map will increasingly turn blue as the stimulus money helps improve the broadband in the areas identified in the map as lacking. For everyone involved in the initial data collection, it means that updates need to be collected and loaded into both the state and national maps.

Is there anyone else out there who has been involved in a similar project for their country?